The Sound of Simulation: Learning Multimodal Sim-to-Real Robot Policies with Generative Audio

Renhao Wang, Haoran Geng, Tingle Li, Feishi Wang, Philipp Wu, Trevor Darrell, Boyi Li, Pieter Abbeel, Jitendra Malik, Alyosha Efros

CoRL, 2025 (Best Paper Finalist)

PDF / Project Page

Learn sim-to-real robot policies with generative audio.

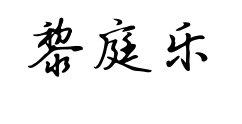

Sounding that Object: Interactive Object-Aware Image to Audio Generation

Tingle Li, Baihe Huang, Xiaobin Zhuang, Dongya Jia, Jiawei Chen, Yuping Wang, Zhuo Chen, Gopala Anumanchipalli, Yuxuan Wang

ICML, 2025

PDF / Project Page

Interactively generate object-specific sounds from visual scenes.

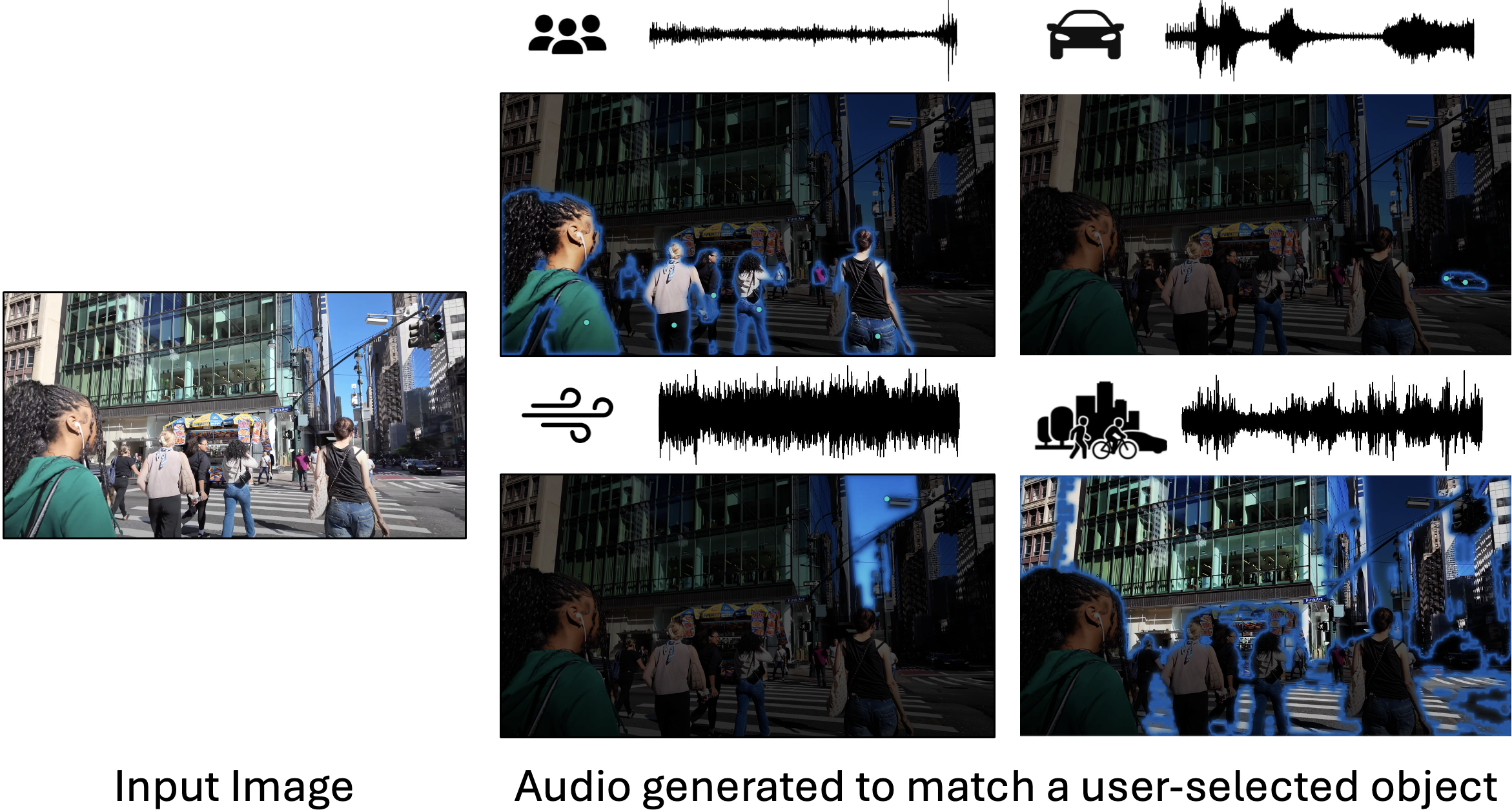

Audio Texture Manipulation by Exemplar-Based Analogy

Kan Jen Cheng, Tingle Li, Gopala Anumanchipalli

ICASSP, 2025

PDF / Project Page

Manipulate audio texture using exemplar-based analogy.

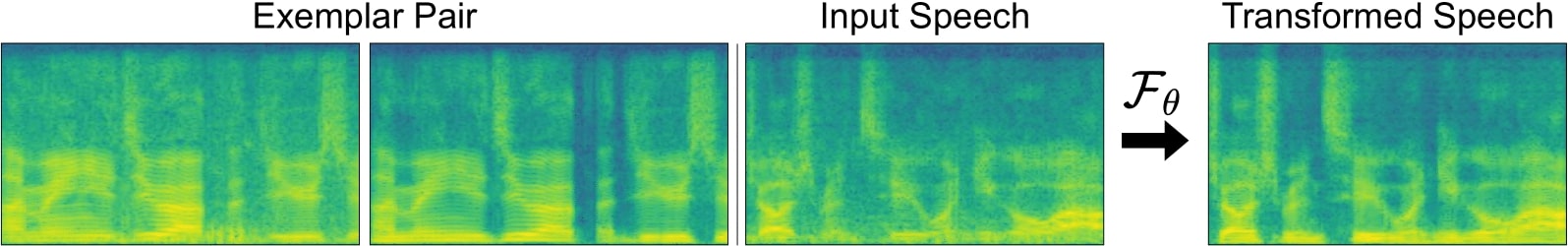

Self-Supervised Audio-Visual Soundscape Stylization

Tingle Li, Renhao Wang, Po-Yao Huang, Andrew Owens, Gopala Anumanchipalli

ECCV, 2024

PDF / Project Page

Restyle a sound to fit another scene, using an audio-visual conditional example taken from that scene.

Deep Speech Synthesis from MRI-Based Articulatory Representations

Peter Wu, Tingle Li, Yijing Lu, Yubin Zhang, Jiachen Lian, Alan Black, Louis Goldstein, Shinji Watanabe, Gopala Anumanchipalli

Interspeech, 2023

PDF

We synthesize speech from MRI videos.

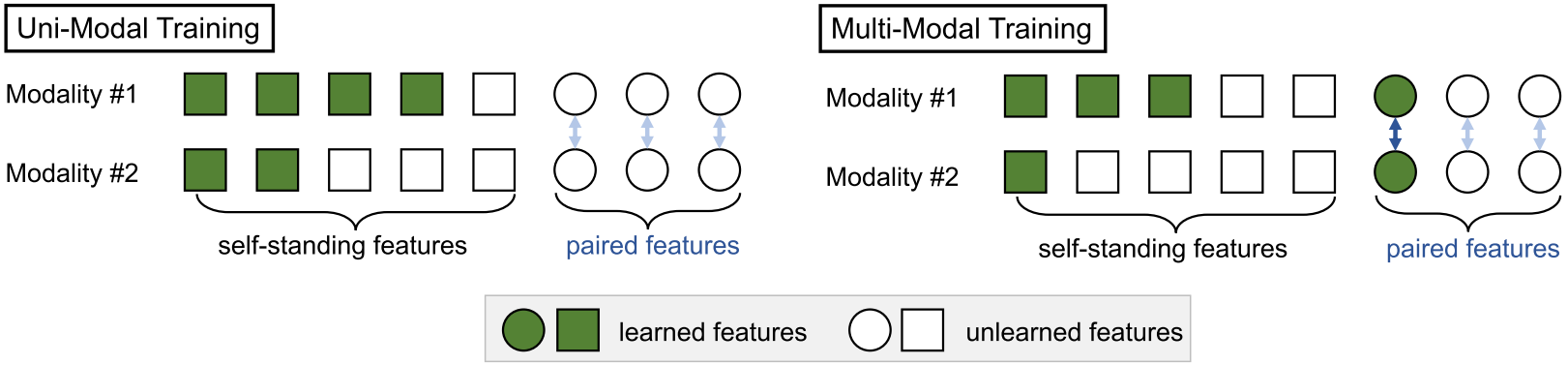

On Uni-Modal Feature Learning in Supervised Multi-Modal Learning

Chenzhuang Du, Jiaye Teng, Tingle Li, Yichen Liu, Tianyuan Yuan, Yue Wang, Yang Yuan, Hang Zhao

ICML, 2023

PDF

We improve the generalization of multimodal models by categorizing their features into uni-modal features and paired features.

Learning Visual Styles from Audio-Visual Associations

Tingle Li, Yichen Liu, Andrew Owens, Hang Zhao

ECCV, 2022

PDF / Project Page / Code

We learn from unlabeled data to manipulate the style of an image using sound.

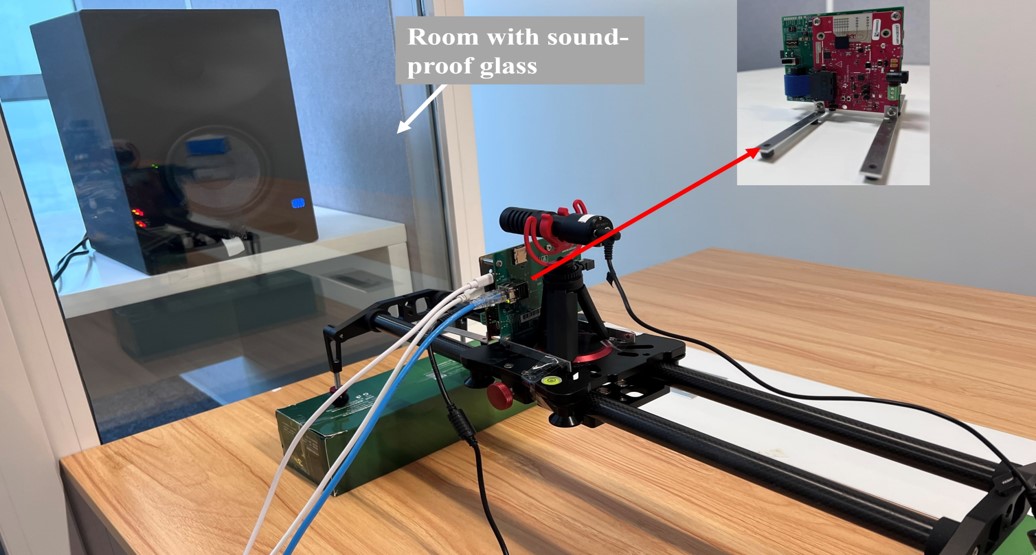

Radio2Speech: High Quality Speech Recovery from Radio Frequency Signals

Running Zhao, Jiangtao Yu, Tingle Li, Hang Zhao, Edith C.H. Ngai

Interspeech, 2022

PDF / Project Page

High-quality speech recovery system for millimeter-wave radar without deafness.

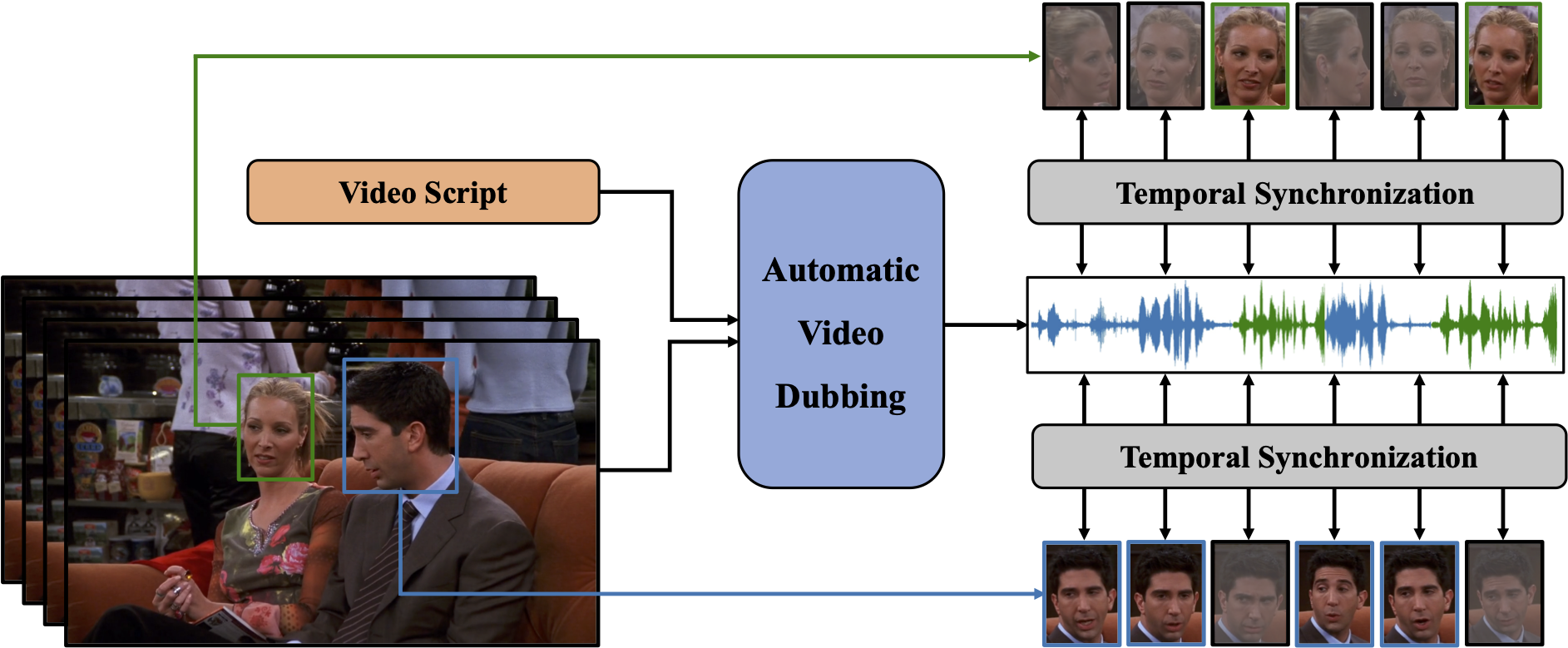

Neural Dubber: Dubbing for Videos According to Scripts

Chenxu Hu, Qiao Tian, Tingle Li, Yuping Wang, Yuxuan Wang, Hang Zhao

NeurIPS, 2021

PDF / Project Page / Press

Automatic video dubbing driven by a neural network.

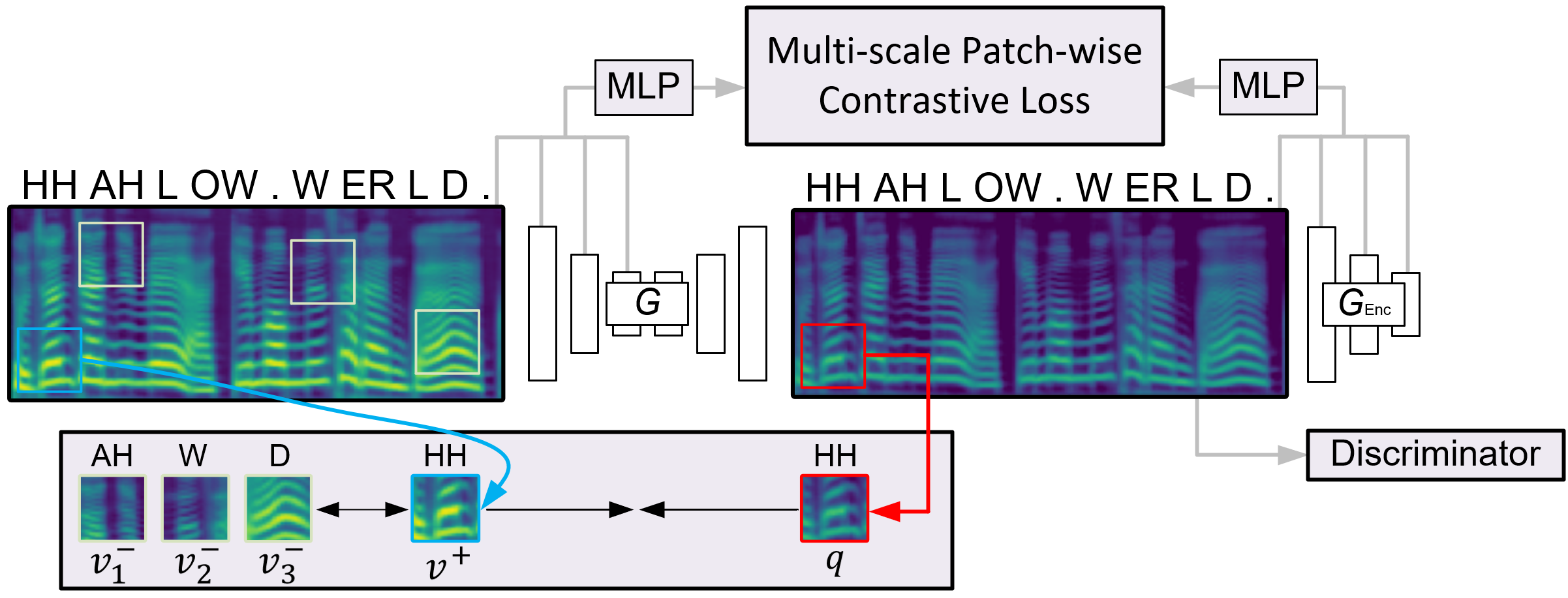

CVC: Contrastive Learning for Non-parallel Voice Conversion

Tingle Li, Yichen Liu, Chenxu Hu, Hang Zhao

Interspeech, 2021 (ISCA Student Travel Grant)

PDF / Project Page / Code

One-way GAN training for non-parallel voice conversion.

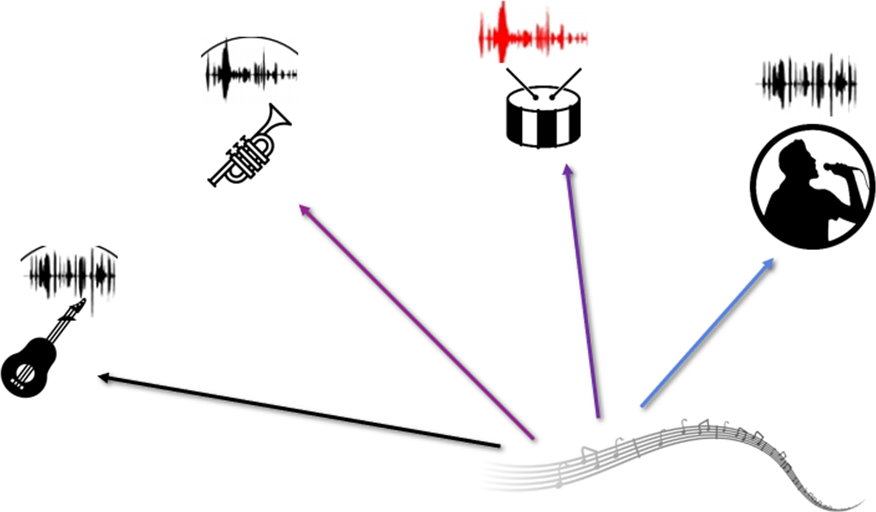

Sams-Net: A Sliced Attention-based Neural Network for Music Source Separation

Tingle Li, Jiawei Chen, Haowen Hou, Ming Li

ISCSLP, 2021 (Oral)

PDF / Project Page

The scope of attention is narrowed down to the intra-chunk musical features that are most likely to affect each other.

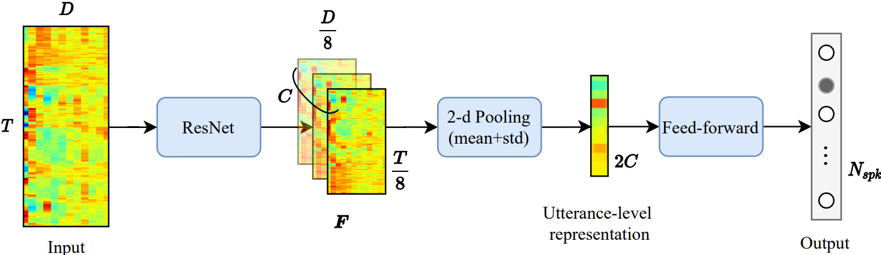

The DKU Speech Activity Detection and Speaker Identification Systems for Fearless Steps Challenge Phase-02

Qingjian Lin, Tingle Li, Ming Li

Interspeech, 2020

PDF / Leaderboard

State-of-the-art performance in speech activity detection and speaker identification.

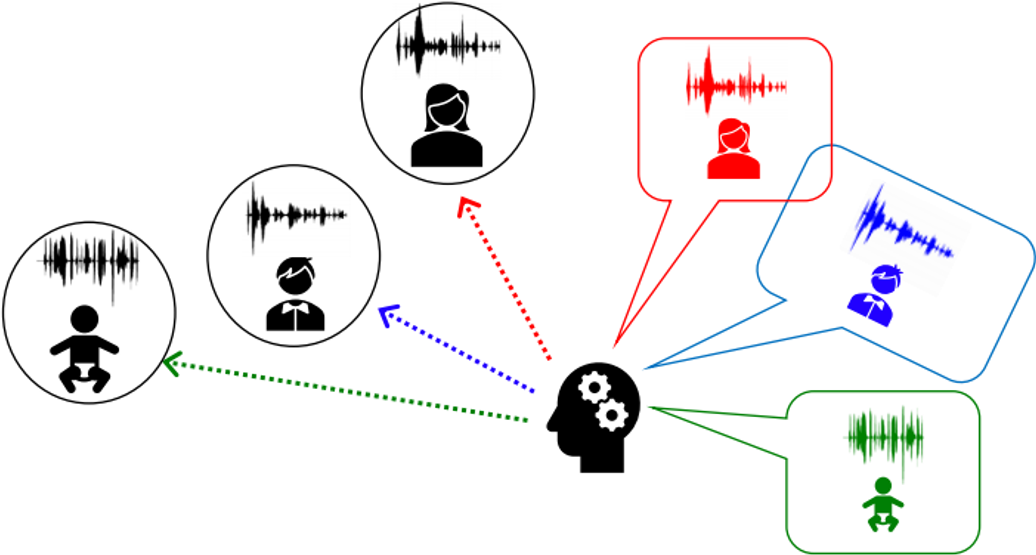

Atss-Net: Target Speaker Separation via Attention-based Neural Network

Tingle Li, Qingjian Lin, Yuanyuan Bao, Ming Li

Interspeech, 2020

PDF / Project Page

We adapt the Transformer to target speaker separation for more efficient and generalizable performance.

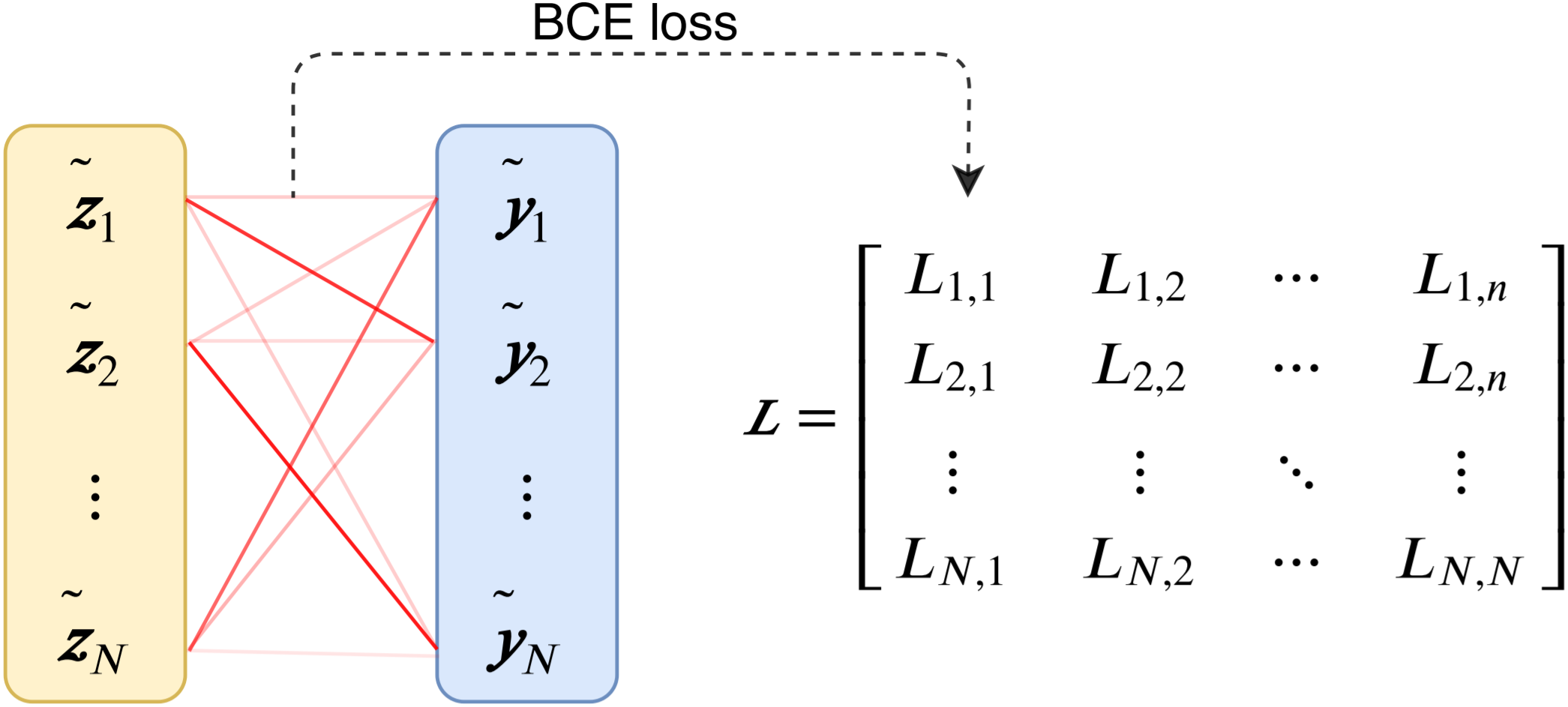

Optimal Mapping Loss: A Faster Loss for End-to-End Speaker Diarization

Qingjian Lin, Tingle Li, Lin Yang, Junjie Wang, Ming Li

Odyssey, 2020

PDF

A new mapping loss based on the Hungarian algorithm that reduces time complexity while maintaining performance for speaker diarization.