CVC: Contrastive Learning for Non-parallel Voice Conversion

¹IIIS, Tsinghua University, ²Zhejiang University

³Shanghai Qi Zhi Institute

[PDF] [Code]

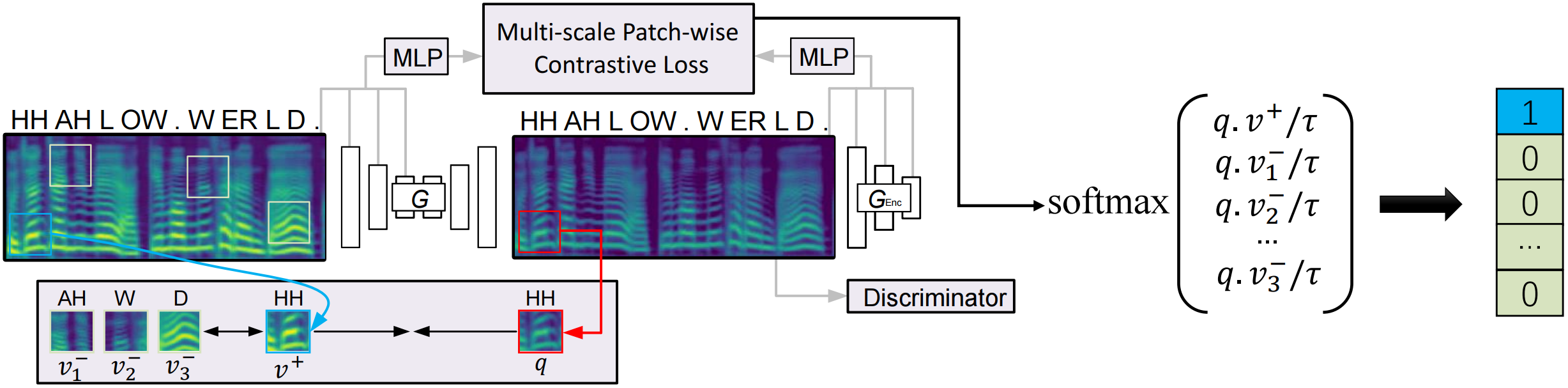

This webpage presents listening examples for our proposed CVC and the baselines CycleGAN-VC[1] and VAE-VC[2]. Compared with previous CycleGAN-based methods, CVC requires only an efficient one-way GAN training by taking advantage of contrastive learning. For non-parallel one-to-one voice conversion, CVC is on par with or better than CycleGAN-VC and VAE-VC while effectively reducing training time. CVC further demonstrates superior performance in many-to-one voice conversion, enabling conversion from unseen speakers.
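The paragraph above credits contrastive learning with making one-way GAN training sufficient. As a rough illustration only, a generic InfoNCE-style contrastive loss could be sketched as below. The function name `info_nce_loss`, the temperature value, and the use of plain Python lists are assumptions made for this example; this page does not specify the exact loss used in CVC.

```python
import math


def info_nce_loss(query, positive, negatives, temperature=0.07):
    """Generic InfoNCE loss for a single query vector (illustrative sketch).

    query, positive: lists of floats with the same length.
    negatives: list of such vectors (may be empty).
    temperature: assumed scaling value, not taken from this page.
    """

    def normalize(v):
        # Scale to unit length; fall back to 1.0 to avoid dividing by zero.
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    q = normalize(query)
    # Index 0 holds the positive pair; the remaining entries are negatives.
    logits = [dot(q, normalize(positive)) / temperature]
    logits += [dot(q, normalize(n)) / temperature for n in negatives]

    # Cross-entropy with the positive as the target class,
    # computed with a numerically stable log-sum-exp.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[0]
```

In a voice conversion setting, one plausible (assumed) setup is that `query` is a feature taken from the converted spectrogram, `positive` is the feature at the corresponding location in the source spectrogram, and `negatives` are features from other locations. The loss is small when the query is closer to its positive than to any negative.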

3-Minute Demo Video

One-to-one Voice Conversion

Type:

- F-F: female-female conversion

- F-M: female-male conversion

- M-M: male-male conversion

- M-F: male-female conversion

| Type | Source | Proposed CVC | CycleGAN-VC[1] | VAE-VC[2] | Target |
|---|---|---|---|---|---|
| F-F | | | | | |
| F-M | | | | | |
| M-M | | | | | |
| M-F | | | | | |

Many-to-one Voice Conversion

Type:

- F-F: female-female conversion

- F-M: female-male conversion

- M-M: male-male conversion

- M-F: male-female conversion

Seen-source-speaker to seen-target-speaker conversion:

| Type | Source | Proposed CVC | Target |
|---|---|---|---|
| F-F | | | |
| F-F | | | |
| F-M | | | |
| F-M | | | |
| M-M | | | |
| M-M | | | |
| M-F | | | |
| M-F | | | |

Unseen-source-speaker to seen-target-speaker conversion:

| Type | Source | Proposed CVC | CycleGAN-VC[1] | Target |
|---|---|---|---|---|
| F-F | | | | |
| F-M | | | | |
| M-M | | | | |
| M-F | | | | |

Comparison with the proposed one-to-one CVC:

| Type | Source | CVC (many-to-one) | CVC (one-to-one) | Target |
|---|---|---|---|---|
| F-F | | | | |
| F-M | | | | |
| M-M | | | | |
| M-F | | | | |

References

[1] T. Kaneko, H. Kameoka, K. Tanaka, and N. Hojo, “CycleGAN-VC3: Examining and Improving CycleGAN-VCs for Mel-spectrogram Conversion,” in Interspeech, 2020, pp. 2017–2021.

[2] C.-C. Hsu, H.-T. Hwang, Y.-C. Wu, Y. Tsao, and H.-M. Wang, “Voice conversion from non-parallel corpora using variational auto-encoder,” in APSIPA, 2016, pp. 1–6.